Projects

Hosting my Portfolio Website

- Hosted a static website from an AWS S3 bucket without making the bucket public.

- Saved all the front-end files in an S3 bucket, then created an AWS CloudFront distribution and gave it access to the bucket's contents.

- Registered a domain name through AWS Route 53 and obtained a certificate for it from AWS Certificate Manager.

- Attached the certificate and the domain name to the CloudFront distribution.

- Built a CI/CD pipeline using GitHub Actions so that changes to the website are easy to deploy.
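
The private-bucket setup above depends on a bucket policy that lets only the CloudFront distribution read objects. The following is a minimal sketch of such a policy, assuming the distribution uses origin access control (the project description does not say which access mechanism was chosen). The function name, bucket name, account ID, and distribution ID are hypothetical placeholders.

```python
import json


def cloudfront_read_policy(bucket: str, account_id: str, distribution_id: str) -> dict:
    """Build an S3 bucket policy that lets one CloudFront distribution read objects.

    Assumes origin access control; all identifiers passed in are placeholders.
    """
    source_arn = f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontRead",
                "Effect": "Allow",
                # Only the CloudFront service principal is allowed ...
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # ... and only on behalf of this specific distribution.
                "Condition": {"StringEquals": {"AWS:SourceArn": source_arn}},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values, not the real bucket or distribution.
    policy = cloudfront_read_policy(
        "example-portfolio-bucket", "111122223333", "EXAMPLEDISTID"
    )
    print(json.dumps(policy, indent=2))
```

With a policy like this, the bucket keeps public access blocked and visitors can reach the files only through the CloudFront domain.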

github repo: https://github.com/ViditNaithani22/viditnaithani-resume

Website link: https://www.viditnaithani.com

ExploreCamps

- A full-stack website to review campgrounds created by others all over the world and add your own campgrounds for others to review.

- Front-end created using HTML, EJS, CSS, and Bootstrap 5.

- MongoDB Atlas is the database; it stores campgrounds, users, reviews, and sessions.

- Node.js and Express.js are used for the server-side application.

- Passport.js is used for user authentication.

- Joi schemas are used to validate the data entered by the user.

- All images are uploaded to Cloudinary via Multer.

- All the maps are provided by the Mapbox API.

github repo: https://github.com/ViditNaithani22/ExploreCamps

Website link: https://explorecamps.onrender.com/

CutePots

- A React e-commerce web application to sell planter pots.

- Hosted via AWS Amplify Gen 2.

- AWS Cognito is used for authentication.

- This project demonstrates the use of state, passing it from parent to child as props, and using the Context API to provide state to multiple children at once.

- Used react-router-dom to create routes for different components.

github repo: https://github.com/ViditNaithani22/amplify-vite-react-template

Website link: https://main.d1dtpis7gcvsz7.amplifyapp.com/

fileShareMe

- A Node.js web application, similar to Google Drive, where you upload files and generate password-protected links to share and download them.

- Implemented text file compression using the Huffman algorithm, reducing file sizes by up to fifty percent.

- Uses MongoDB Atlas for data storage; the application is hosted on AWS EC2 and run with PM2.
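
To illustrate the Huffman compression mentioned above, here is a minimal Python sketch of the technique. It is not the project's own code (the application itself is written in Node.js), and the function names `build_codes`, `encode`, and `decode` are hypothetical. The output is a string of "0" and "1" characters for readability; a real implementation would pack these bits into bytes and store the code table alongside them.

```python
import heapq
from collections import Counter


def build_codes(text: str) -> dict[str, str]:
    """Return a prefix-free bit string for every distinct character in text."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs a one-bit code.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {char: code built so far}).
    heap = [(weight, i, {ch: ""}) for i, (ch, weight) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Merging two subtrees prepends one bit to every code beneath them.
        merged = {ch: "0" + code for ch, code in left.items()}
        merged.update({ch: "1" + code for ch, code in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(text: str, codes: dict[str, str]) -> str:
    """Concatenate the code of each character."""
    return "".join(codes[ch] for ch in text)


def decode(bits: str, codes: dict[str, str]) -> str:
    """Walk the bit string and emit a character whenever a full code is read."""
    reverse = {code: ch for ch, code in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            out.append(reverse[current])
            current = ""
    return "".join(out)


if __name__ == "__main__":
    sample = "abracadabra"
    codes = build_codes(sample)
    bits = encode(sample, codes)
    print(len(bits), "bits instead of", 8 * len(sample))
    print(decode(bits, codes) == sample)
```

Frequent characters receive shorter codes, which is where the size reduction on text files comes from; the achievable ratio depends on how skewed the character frequencies are.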

github repo: https://github.com/ViditNaithani22/fileShareMe

Website link: http://23.20.146.219

Outdated Comment Detection (Capstone Project)

- A TensorFlow dual-encoder model trained to detect outdated comments in Java codebases.

- Trained a fastText model to generate embeddings for all the code-comment change samples in our dataset.

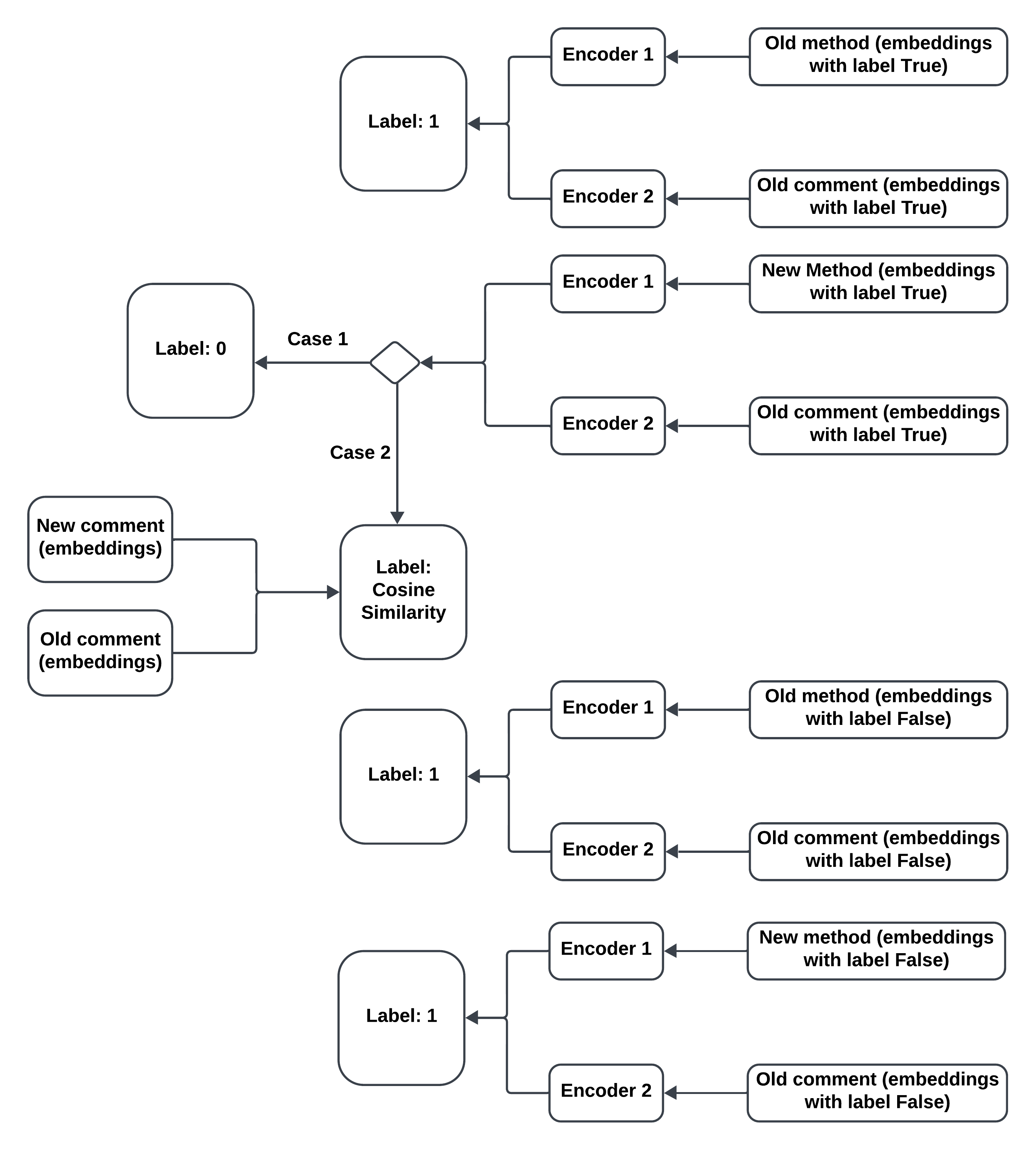

- First, we train the two encoders so that the cosine similarity between old_comment (the comment in the previous commit) and old_method (the method in the previous commit) is 1.

- Case 1: train the two encoders so that the cosine similarity between old_comment and new_method (the method in the new commit) is 0.

- Case 2: train the two encoders so that the cosine similarity between old_comment and new_method equals the cosine similarity between old_comment and new_comment (the comment in the new commit).

- Results: Rank-error=0.3564, F1-Score=0.026, Recall=0.019, Precision=0.042

- Key libraries used: TensorFlow, NumPy, pandas, fastText, Keras
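
The training targets described in the two cases above can be sketched in plain Python. This is only an illustration under assumptions: it assumes the Case 2 similarity is computed on the comment embedding vectors, and the helper names `cosine_similarity` and `training_targets` are hypothetical. The loss function and encoder architecture are not stated here, so they are left out.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def training_targets(old_comment: list[float], new_comment: list[float], case: int):
    """Return (target for old_comment vs old_method, target for old_comment vs new_method).

    Assumption: old_comment and new_comment are embedding vectors of the comments.
    """
    if case == 1:
        # The changed method is treated as fully inconsistent with the old comment.
        return 1.0, 0.0
    if case == 2:
        # The target shrinks only as much as the comment itself changed.
        return 1.0, cosine_similarity(old_comment, new_comment)
    raise ValueError("case must be 1 or 2")


if __name__ == "__main__":
    old_c = [0.2, 0.7, 0.1]  # made-up embedding values for illustration
    new_c = [0.1, 0.6, 0.4]
    print(training_targets(old_c, new_c, case=1))
    print(training_targets(old_c, new_c, case=2))
```

Case 2 gives a softer target than Case 1: a small comment edit implies the old comment is still mostly consistent with the new method.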

github repo: https://github.com/hil-se/OutDatedCommentDetection

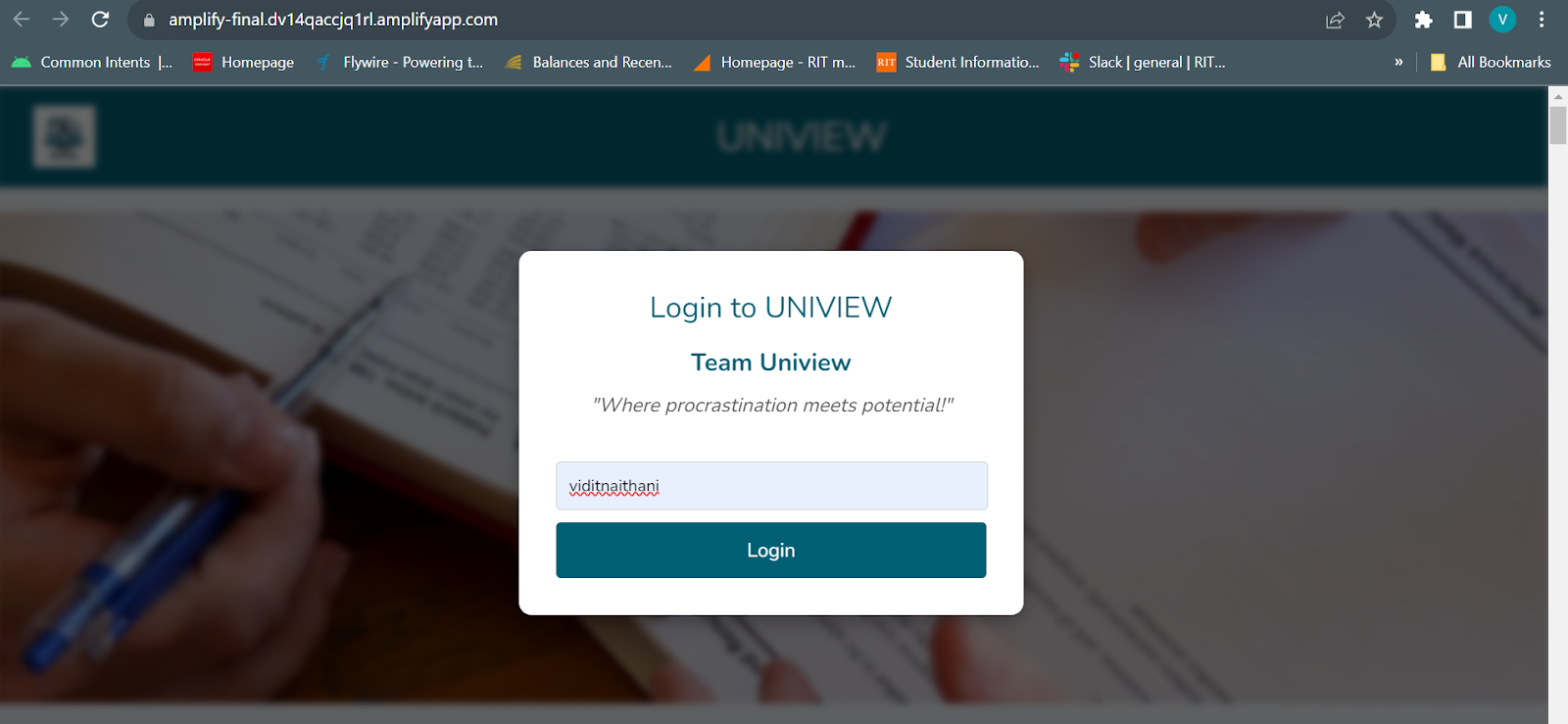

Uniview (SWEN614 Group Project)

- Uniview gives you a summary of the Google Maps reviews for a university that you are interested in.

- Uses AWS Lambda to fetch and filter 1,500 reviews from the Google Maps profiles of 100 different universities.

- Performs sentiment analysis on each review using AWS Comprehend and uses AWS Personalize to recommend 5 similar universities for each university.

- Implements the TF-IDF NLP algorithm to extract the 10 most common words from positive and negative reviews.

- The entire infrastructure was provisioned with Terraform. Other services used: EC2, Amplify, S3, CloudWatch, DynamoDB.
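
The TF-IDF keyword step above can be sketched in a few lines of Python. This is a minimal illustration and not the project's code: it assumes each review is treated as one document, uses a smoothed IDF, sums the per-review scores to rank words, and does no stop-word removal. The function name `top_tfidf_words` and the sample reviews are hypothetical.

```python
import math
import re
from collections import Counter


def top_tfidf_words(reviews: list[str], k: int = 10) -> list[str]:
    """Rank words by their summed TF-IDF score across all reviews."""
    # Tokenize each review into lowercase words; drop empty reviews.
    docs = [re.findall(r"[a-z']+", review.lower()) for review in reviews]
    docs = [doc for doc in docs if doc]
    n_docs = len(docs)

    # Document frequency: in how many reviews each word appears.
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(doc))

    scores = Counter()
    for doc in docs:
        term_counts = Counter(doc)
        for word, count in term_counts.items():
            tf = count / len(doc)
            # Smoothed IDF so a word present in every review still scores above zero.
            idf = math.log((1 + n_docs) / (1 + doc_freq[word])) + 1
            scores[word] += tf * idf
    return [word for word, _ in scores.most_common(k)]


if __name__ == "__main__":
    positive_reviews = [
        "great campus great professors",
        "great food",
        "parking is bad",
    ]
    print(top_tfidf_words(positive_reviews, k=3))
```

Running the function separately on the positive and the negative reviews of one university yields one keyword list per sentiment.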

github repo: https://github.com/ViditNaithani22/Uniview-terraform

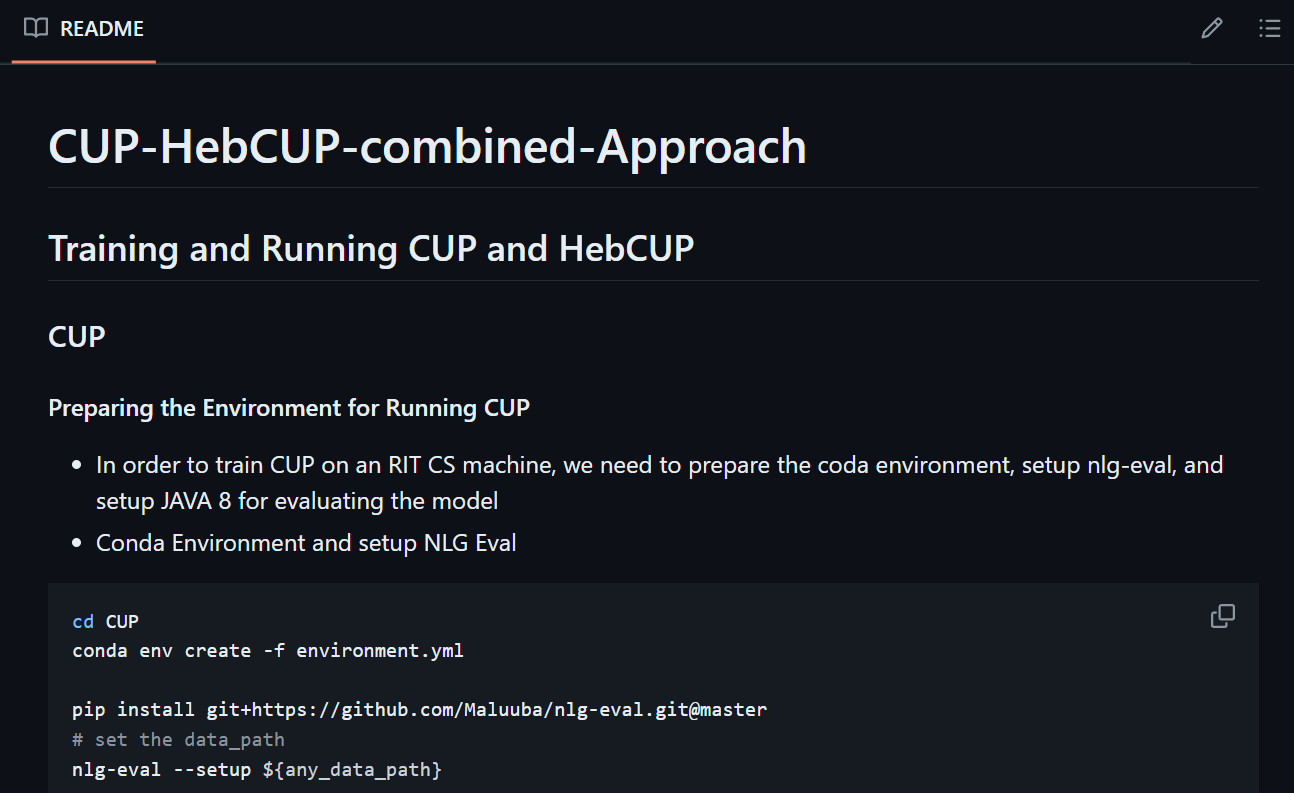

CUP+HebCUP (CSCI635 Group Project)

- CUP is a Seq2Seq model and HebCUP is a heuristic-based approach; both are used to update outdated comments.

- We divided 1,063K code-comment pairs between CUP and HebCUP labels based on the NCSM scores.

- We aim to update each code-comment pair based on its label and compare our performance with using either CUP or HebCUP alone on the entire dataset.

- Key libraries used: TensorFlow, NumPy, Keras, PyTorch, cudatoolkit, cuDNN, scikit-learn, SciPy

github repo: https://github.com/ViditNaithani22/CUP-HebCUP-combined-Approach